20 June 2024

Case Study

HyperGAI trains its HPT models with SMC for sustainable AI innovation

HyperGAI is an applied AI company that specialises in Generative AI technology and foundation models. Founded in Singapore, they are developing a new kind of large language model (LLM) that can understand multimodal inputs like text, images, videos, and more, unlike traditional text-only LLMs.

This is achieved through a novel pretraining framework known as “Hyper Pre-Trained” (HPT). Invented by HyperGAI, this framework allows for the development of large multimodal foundation models that can understand various types of data in an efficient and scalable manner.

An initial challenge for HyperGAI was to develop foundation models to understand and create personalised content from various types of inputs. To achieve that, they needed to train LLMs that could comprehend any kind of input and generate personalised content in any output mode.

Scalability with sustainability

Training these models calls for fast, cost-effective GPU clusters with scope to grow in the future. Deployed in 2023, SMC’s H100 GPU clusters within the Singapore cloud region gave HyperGAI the capacity and GPU performance they needed to develop their family of foundation models. As a result, HyperGAI was able to release three HPT models in the first half of 2024 alone.

To deal with the large media files needed for multimodal model development, the speed and size of the storage network matter. HyperGAI credits success on this front to SMC’s extremely high-performance network file system. Based on WEKA, the distributed, parallel file system was written entirely from scratch to deliver the highest-performance file services using NVMe Flash. The highly scalable performance allowed HyperGAI to effortlessly saturate every node with the needed data during training.

By using SMC over legacy cloud options in Singapore, HyperGAI ultimately saved 29 tonnes of CO2 per month. With this blend of performance and sustainability, SMC enabled HyperGAI to advance their research while adhering to their ethical and environmental standards.

State-of-the-art models

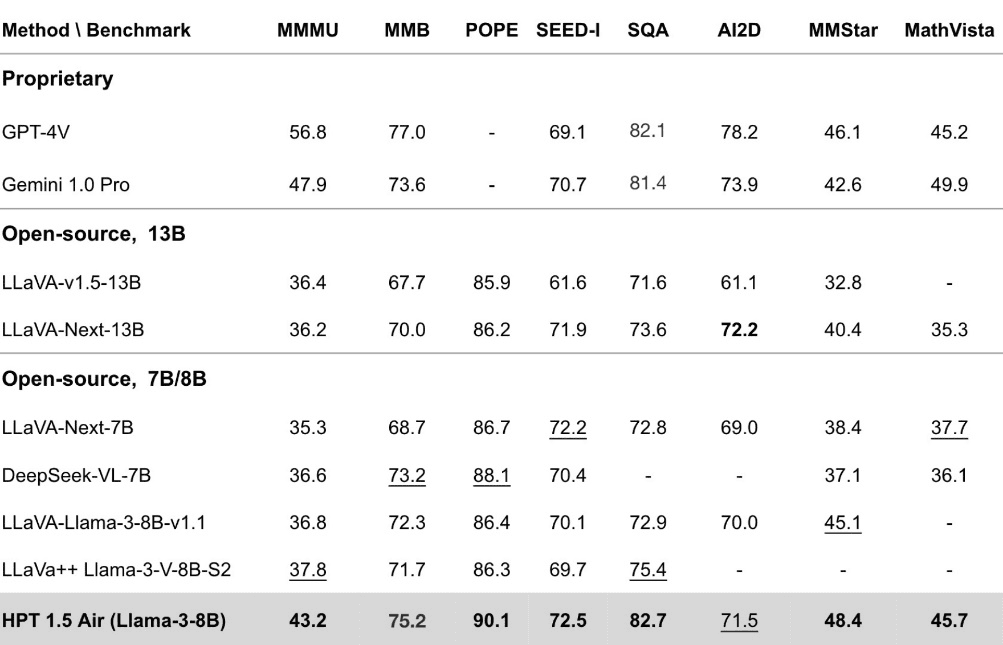

In March 2024, HyperGAI came out of stealth mode and released two models: HPT Pro and HPT Air. In early May, HyperGAI released HPT 1.5 Air, which comes with an upgraded visual encoder and incorporates the then newly released Llama 3 8B model, with training performed on an improved dataset.

HPT Pro outperforms GPT-4V and Gemini Pro on the MMBench and SEED-Image benchmarks. For its part, HPT 1.5 Air is arguably the best multimodal Llama 3 model on the market today, outperforming bigger, proprietary models on multiple benchmarks, including MMMU and POPE.

“Collaborating with SMC and leveraging NVIDIA’s H100 GPUs has been pivotal for HyperGAI in advancing the frontier of Multimodal Generative AI technology, sustainably.”

By turning to SMC for its computational infrastructure as a cloud service to meet the intensive demands of multimodal AI model training, HyperGAI was able to achieve its lofty objectives without the significant environmental toll typically associated with such endeavours.