6 March 2024

Could next generation GPUs break the cloud? — ‘The pending catastrophe’

‘The pending catastrophe’: a dramatic title, to be sure! It is not, however, something I can take credit for. This unusually bold statement was the title of a presentation given to my colleagues and me almost two years ago by a major global server OEM known for its leadership in the design and build of HPC systems.

The presentation was a sales call, and the vendor was focused on its direct-to-chip liquid cooling (‘DLC’) solutions. The title was an attempt to educate prospects (us, in this case) on three points: how little runway is left for legacy data centre (DC) cooling infrastructure, given the high power requirements of CPU and GPU chips in development; how grossly unprepared the DC and cloud industry was on the infrastructure front; and how few solutions existed to address the rising questions of sustainability in the DC and cloud space.

It was for these very reasons that we began our research and launched the technology project behind SMC, about five years before this sales presentation. We were creating a computing platform that would provide a generational leap in efficiency — and at the same time be able to deliver the benefits seamlessly to users, without a barrier to adoption.

Having our key themes pitched back to us in this presentation was ironic, but it was also a seminal moment. We weren’t crazy. The industry was waking up to a problem that was looming dangerously close.

At that stage, we had refined over five iterations of the HyperCube — now SMC’s revolutionary hosting platform — and were already into large-scale (20MW) production testing, working closely with NVIDIA and our own OEMs on validating the computing fabric.

So, we kept pushing…

Enter ChatGPT: AI’s ‘iPhone moment’

On the 30th of November 2022, AI had its ‘iPhone moment’. So proclaimed Jensen Huang, who, since 2012’s AlexNet CNN paper, had steadily pushed for the development of the GPU-accelerated AI industry and the transformation of NVIDIA from a video game card maker into an AI powerhouse.

With ChatGPT, everything changed. Previously the domain of researchers and futurists, AI suddenly became available and comprehensible. Anyone could use it with just a few clicks in their browser, and the whole world woke up to the promise of AI, sparking a technical revolution that is still just beginning to unfold.

The rapid ascent of artificial intelligence from a niche research interest to a cornerstone of global technological infrastructure is nothing short of remarkable. This surge is not just about smarter algorithms or more engaging chatbots; it's about fundamentally transforming how we live, work, and interact with the world around us. Yet, as we marvel at the potential of AI to drive unprecedented innovation and prosperity, a problem looms large.

“ ‘The pending catastrophe’ is not just around the corner. It is here, pulled forward by the monumental rise of demand for AI services, driven by an insatiable demand for power-hungry GPUs. ”

The catalysts of the catastrophe: Three megatrends

At SMC, alongside the phrase ‘pending catastrophe’, we have what we call ‘megatrends’. Put simply, these are three key themes we have observed over the years. They were previously independent, but our thesis was that they were headed towards an inevitable union that would upend the cloud, data centre and AI economies irrevocably and in unison:

- Demand for AI services and their underlying infrastructure

- Exponentially rising TDPs in GPUs and CPUs in the data centre

- Sustainability requirements finally becoming visible in the ICT & cloud industry, beyond mere greenwashing

The convergence of these themes is now directly shaping the data centre market, the cloud computing market and the AI boom. All three are crashing into the reality of the sustainability movement: the need to take genuine steps to mitigate climate change across all industries of the global economy is here.

1. The Demand for AI Services

This is a well-covered topic in the press and industry. No matter the source, there is strong consensus that AI is here to stay and on a steep growth path. At some stage in its life cycle, if not all the time, AI runs on powerful GPU and CPU processors, especially in development. Demand for the servers that house these processors has surged, and those servers are hosted at large scale in cloud data centres around the world.

There are two main problems on hand here:

Air-cooled DCs: Most of the world’s data centres, either built or under construction, use refrigerated air to cool the servers. Air is a relatively inefficient conductor of heat. Additionally, these DC designs, which make up the vast majority of the world’s fleet, were never conceived with high-powered AI chips in mind. There is a mismatch between the power the AI chips demand and the cooling these facilities were designed to provide.

Growing TDPs: Peak TDPs (Thermal Design Power: the power and cooling requirements of CPU and GPU chips) are already pushing legacy cloud data centres to breaking point, and they are accelerating like never before, compounding the cooling requirements of the already inadequate global cloud data centre fleet.

2. Rising GPU & CPU temperatures

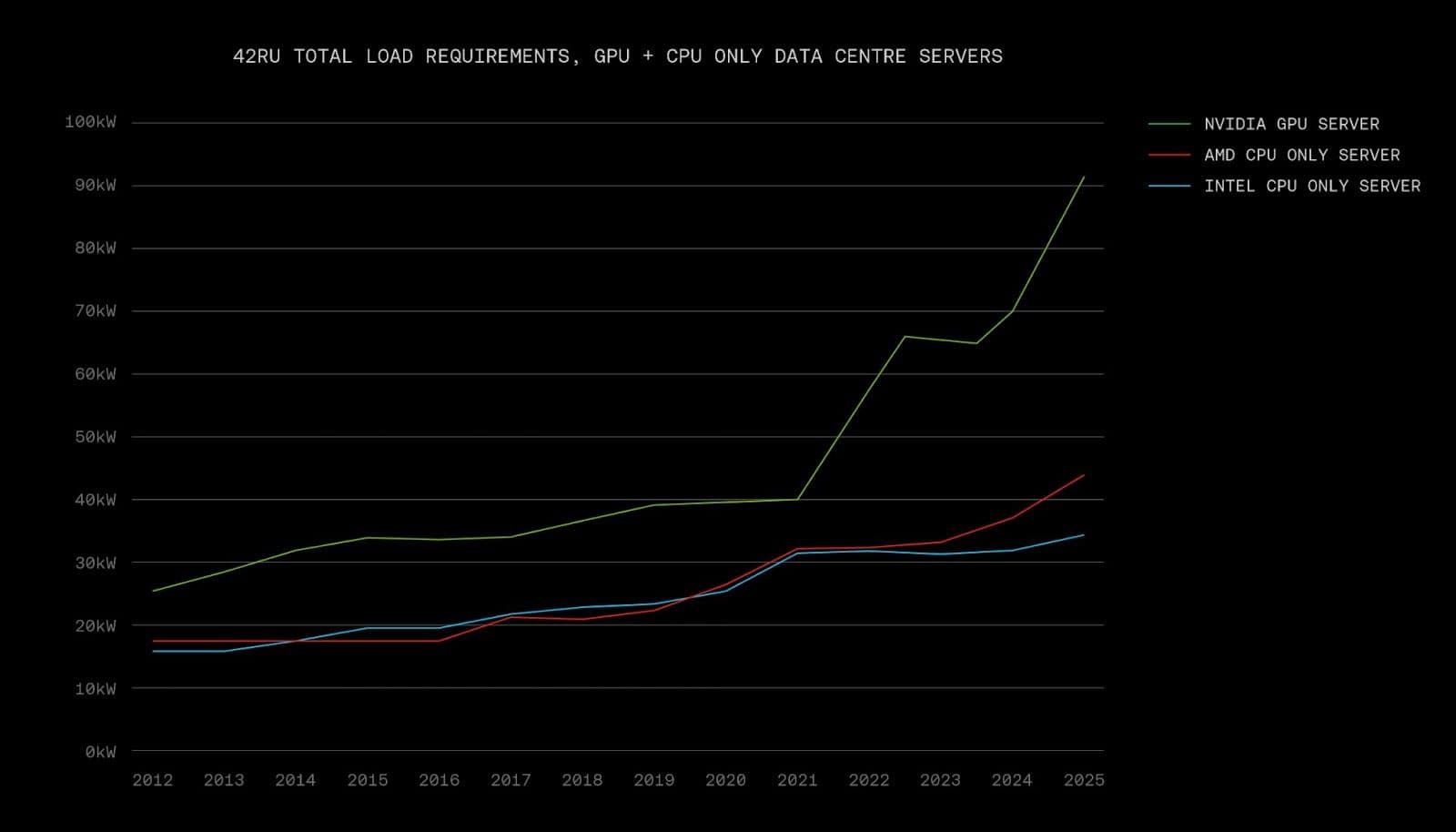

In 2020, only 3% of global cloud data centres (DCs) were equipped to accommodate rack densities greater than 50kW, a figure that barely scratches the surface of modern AI servers' requirements. Fuelled by the AI boom and the rising demands of modern AI workloads, the chips within these servers are set to get hotter… much hotter.

Consider a current-generation NVIDIA H100-based HGX server, which consumes as much as 10.5kW of power in 6RU, making even the most advanced IDCs struggle with rack density. Try filling a 42RU rack with 7 of these. What do you get? Over 70kW of power per rack. The DCs that can accommodate the cooling required for this are few and far between.
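The rack arithmetic above can be reproduced in a few lines. This is a minimal sketch using only the figures quoted in the text (42RU rack, 6RU server, 10.5kW peak per server); the function and constant names are illustrative, and the calculation assumes every rack unit is given over to servers, with nothing reserved for switches or power distribution.

```python
# Figures quoted in the text. Names are illustrative, not from any vendor tool.
RACK_HEIGHT_RU = 42      # standard full-height rack
SERVER_HEIGHT_RU = 6     # one H100-based HGX server
SERVER_POWER_KW = 10.5   # peak draw per server, as quoted


def servers_per_rack(rack_ru: int, server_ru: int) -> int:
    """Whole servers that fit, assuming the full rack is used for servers."""
    return rack_ru // server_ru


def rack_power_kw(rack_ru: int, server_ru: int, server_kw: float) -> float:
    """Peak rack power if every server draws its peak at the same time."""
    return servers_per_rack(rack_ru, server_ru) * server_kw


if __name__ == "__main__":
    count = servers_per_rack(RACK_HEIGHT_RU, SERVER_HEIGHT_RU)
    power = rack_power_kw(RACK_HEIGHT_RU, SERVER_HEIGHT_RU, SERVER_POWER_KW)
    print(f"{count} servers per rack, {power} kW peak")
```

Seven servers at 10.5kW each comes to 73.5kW, which is the "over 70kW" figure, and well beyond the 50kW density that only a small share of facilities could support.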

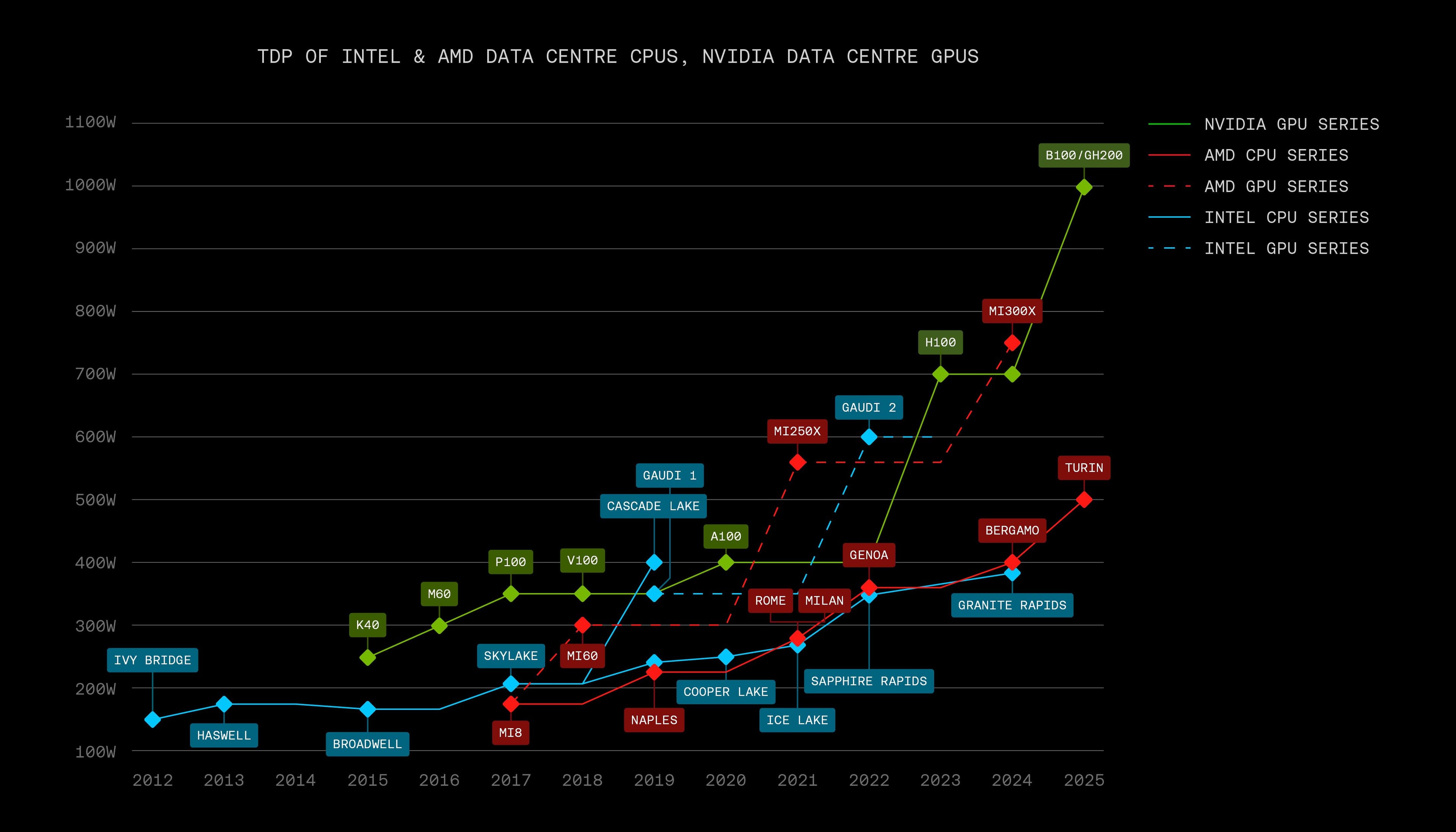

How did we get here, and is this just a niche problem? It started slowly, but as the chart shows, the pace is picking up. And it’s not just GPUs that are the issue:

Here, we track the peak TDP of each generation of top-performing data centre processors. Intel in blue, AMD in red (solid for CPU, dashed for GPU), and NVIDIA GPUs in solid green. The most recent data points are based on leaks or roadmaps, commonly found online.

Looking ahead, NVIDIA's next-generation Blackwell architecture (B100) for deep learning is rumoured to be designed around a 1,000W Thermal Design Power (TDP), exemplifying the industry's trajectory towards higher TDPs. The resulting need for more robust cooling underscores a broader challenge, as IDCs and their clients grapple with infrastructure that is quickly becoming obsolete.

Building a next-gen AI server in 2025? Pair 8 Blackwell GPUs at a possible 1kW each with two AMD Turin CPUs at 500W each, allow for system power and fans, and we have the makings of a 15kW server. When mapped to rack density, things get out of control very quickly. A simulated Blackwell HGX server (air-cooled) could require as much as 80-90kW per rack.
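To see where the 15kW goes, the sketch below adds up the chip TDPs and treats the remainder as the allowance for system power and fans. It assumes the 500W figure is per CPU, and that the rumoured 1kW GPU TDP holds; the 6kW remainder is simply what the text's 15kW total implies, not a measured value. All names are hypothetical.

```python
# Rumoured or roadmap figures quoted in the text; treat them as assumptions.
GPU_COUNT = 8
GPU_TDP_KW = 1.0         # rumoured Blackwell TDP
CPU_COUNT = 2
CPU_TDP_KW = 0.5         # assumed to be per CPU
SERVER_TOTAL_KW = 15.0   # the text's estimate for the whole server


def chip_power_kw() -> float:
    """Combined TDP of the GPUs and CPUs alone."""
    return GPU_COUNT * GPU_TDP_KW + CPU_COUNT * CPU_TDP_KW


def implied_overhead_kw() -> float:
    """What is left for memory, NICs, storage, fans and power conversion losses."""
    return SERVER_TOTAL_KW - chip_power_kw()


def servers_within_budget(rack_budget_kw: float) -> int:
    """Whole 15kW servers that fit inside a given rack power budget."""
    return int(rack_budget_kw // SERVER_TOTAL_KW)


if __name__ == "__main__":
    print(f"chips: {chip_power_kw()} kW, overhead: {implied_overhead_kw()} kW")
    for budget in (80.0, 90.0):
        print(f"{budget} kW rack -> {servers_within_budget(budget)} servers")
```

On these assumptions the chips alone account for 9kW, and an 80-90kW rack holds only five or six such servers.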

Take note, this only accounts for 2025. Extrapolate that growth line over a few years: 100kW racks become the new baseline, and with them, hundreds of billions of dollars invested in legacy DC infrastructure face early obsolescence.

3. Cloud, DC & AI industries: Sustainability legislation is coming

This final megatrend is the simplest and, like the rise of AI, we would say fairly undisputed. Legacy cloud infrastructure is already under heavy scrutiny for its climate change impact, with some estimates putting its carbon output on par with that of the airline industry. The real problem for AI? Not only is it the fastest-growing workload in the cloud, but it also uses the most energy, and as we’ve already discussed, the confluence of rising chip temperatures and continued growth in demand means it is going to use a great deal more.

This is not going unnoticed. There has been a sharp rise in visibility on the matter and legislation is rapidly approaching, such as the proposed ‘AI Environmental Impacts Act’ before the US Senate.

Let’s just focus on the AI industry for now:

Take IEEE’s recent research, which estimates the total energy required today to run AI workloads at 29.3 terawatt-hours annually. This figure mirrors the entire electricity consumption of Ireland, or equates to running around 1.6 million H100 GPUs. Hypothetically, were this consumed in Singapore (SMC’s HQ and home to two of our HyperCube-based AZs), it would result in approximately 12.47 megatonnes of CO2 emissions annually.

“ To put this into perspective, that's akin to the pollution from over 2.7 million passenger vehicles on the road for a year, 635,000 US households, or the carbon-absorbing power of 577 million trees. The environmental impact is significant and far-reaching. ”
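The figures above hang together arithmetically, and a short sketch makes the relationships explicit. It assumes a year of 8,760 hours and GPUs running around the clock, and it derives the grid emission factor implied by the text's own numbers rather than quoting an official one. Function names are illustrative.

```python
HOURS_PER_YEAR = 8760    # assumes a non-leap year of continuous operation

# Figures quoted in the text.
AI_ENERGY_TWH = 29.3     # annual energy for AI workloads
GPU_COUNT = 1.6e6        # equivalent number of H100 GPUs
EMISSIONS_MT = 12.47     # megatonnes of CO2 for the Singapore scenario


def average_power_gw(energy_twh: float) -> float:
    """Continuous power draw that adds up to the given annual energy."""
    return energy_twh * 1000 / HOURS_PER_YEAR  # TWh -> GWh, then divide by hours


def power_per_gpu_kw(energy_twh: float, gpus: float) -> float:
    """All-in power per GPU, including server and facility overheads."""
    return average_power_gw(energy_twh) * 1e6 / gpus  # GW -> kW


def implied_grid_factor(emissions_mt: float, energy_twh: float) -> float:
    """kg of CO2 per kWh implied by the two quoted totals."""
    return (emissions_mt * 1e9) / (energy_twh * 1e9)  # Mt -> kg, TWh -> kWh


if __name__ == "__main__":
    print(f"average power: {average_power_gw(AI_ENERGY_TWH):.2f} GW")
    print(f"per GPU: {power_per_gpu_kw(AI_ENERGY_TWH, GPU_COUNT):.2f} kW")
    print(f"grid factor: {implied_grid_factor(EMISSIONS_MT, AI_ENERGY_TWH):.3f} kg/kWh")
```

Under these assumptions, 29.3 TWh a year is a continuous draw of roughly 3.3 GW, or about 2.1 kW per GPU once server and facility overheads are included, and the emissions figure implies a grid intensity of about 0.43 kg of CO2 per kWh.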

That is, of course, with today’s demand and today’s chips. What of 1,000W NVIDIA Blackwell chips or the next-generation AMD Instinct? What happens when the demand for AI continues to grow, regardless of the processor? As far as climate change is concerned, it’s all bad news.

Well, it's not all bad news. Regional regulations are setting new standards to curb this trend. In Europe, the EU Energy Efficiency Directive calls for transparency in reporting energy use and PUE (Power Usage Effectiveness). Germany’s Energy Efficiency Act goes further: data centres operational before July 1, 2026 must meet a PUE of 1.5 by 2027 and 1.3 by 2030, while those starting afterwards need a PUE of 1.2, underscoring the pressing need for sustainable technology.
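PUE is the ratio of a facility's total energy use to the energy used by its IT equipment, so a PUE of 1.5 means half as much energy again goes on cooling and other overheads. The sketch below encodes the thresholds quoted above as a simple lookup. It is a simplified reading, not legal guidance: it treats "by 2027" and "by 2030" as applying from those calendar years onward, and the function names are hypothetical.

```python
from datetime import date
from typing import Optional

CUTOFF = date(2026, 7, 1)  # facilities operational before this date get the phased limits


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy over IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def required_pue(operational_from: date, year: int) -> Optional[float]:
    """Maximum PUE for a facility in a given year, or None if no limit applies yet."""
    if operational_from >= CUTOFF:
        return 1.2
    if year >= 2030:
        return 1.3
    if year >= 2027:
        return 1.5
    return None


def is_compliant(measured_pue: float, operational_from: date, year: int) -> bool:
    """True when the measured PUE is at or below the applicable limit."""
    limit = required_pue(operational_from, year)
    return limit is None or measured_pue <= limit


if __name__ == "__main__":
    measured = pue(total_facility_kwh=140.0, it_equipment_kwh=100.0)
    print(measured, is_compliant(measured, date(2020, 1, 1), 2030))
```

A legacy facility running at a PUE of 1.4 would pass the first threshold but fail the second, which is the squeeze that air-cooled designs face.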

So, what is the industry’s solution to tackle this issue?

OpenAI’s Sam Altman calls for fast-tracking the development of nuclear fusion to solve the problem. Others advocate building AI clusters only in regions powered solely by renewable energy. Both are ambitious and worthwhile efforts that should be pursued more vigorously (certainly renewables). However, neither is capable of solving the problems that exist in the industry today on a global scale.

Focusing on renewables comes closest. A small handful of countries have energy grids at or close to zero CO₂-e/kWh, yet these are typically in remote regions, such as far northern Europe. Issues like latency, data sovereignty and data gravity mean that, despite the attractiveness of renewable-powered ‘AI hubs’ for the planet, such a concept is unlikely to be embraced by the vast majority of nations, enterprises and citizens, who insist on increasing transparency about where and how their data is stored, processed and secured.

In reality, AI clusters will continue to be built in countries all around the world, as they have been to date. This means that for most of the developed world, these AI clusters will continue to be powered by fossil fuels — for many decades to come.

Solving the ‘megatrends’

How do you make AI infrastructure materially more affordable? How can it be scaled, quickly and in any environment? How do you prepare for what’s next, solving the mismatch of legacy infrastructure and modern GPUs? How do you do this in a way that is materially more efficient and sustainable than anything that has come before?

SMC exists because of these very questions.

We found the answer and spent 10 years perfecting it: breakthrough hosting technology, the HyperCube, purpose-built to be the perfect, most efficient process flow sheet for turning electrons (electricity) into FLOPs (computations).

A purpose-built, Sustainable AI Factory

One that cuts CO₂ emissions by up to 48%, and the cost to access AI infrastructure by over 70%. One built for true hyperscale, delivering its output consistently and reliably with concurrent maintainability, delivering energy savings in any region it is deployed. One that runs mega-clusters of NVIDIA SXM NVSwitch enabled GPUs, full-rail InfiniBand, with zero compromise performance.

The infrastructure that we developed

HyperCube, the backbone of SMC’s GPU cloud product, is a true game changer. It uses up to 48% less energy to power HGX H100 workloads, has a 4x smaller land footprint, and is integrated into high-quality Tier III data centres. It is our perfect process flow sheet.

The medium employed by the HyperCube

We use single-phase immersion cooling for our GPU servers, which means that we do away with fans inside the servers. The cooling system itself cleverly uses condenser water from existing cooling towers, whilst other innovations, like delivering DC power directly to each server via a bus, cut e-waste and add further efficiency. For more information on HyperCube, check out some of our other posts, and watch out for some upcoming technology deep dives.

We’ve already delivered H100-based instances powered by HyperCube in our Singapore AZ. And right now, we’re under construction or in advanced planning for deployments in India, Australia, Thailand and Europe.

In Singapore, specifically, customers using SMC to develop and deploy AI workloads are cutting their direct carbon footprint by that 48% figure, vs an air-cooled H100 SXM instance in a typical Singapore-based data centre.

And finally, how do we make this accessible?

As you can tell by now, there is a lot to unpack. Sustainable AI Factories that are built for true hyperscale, designed to cut energy use by nearly half - no matter what the workload. The technology is incredible, relevant, and needed.

However, the final hurdle is the delivery method.

“ How do we deliver sustainable, cost-effective AI computing to the world? ”

Sustainable Metal Cloud was our answer. Accessibility is about simplicity and adaptability.

- HyperCube technology, integrated within a global fleet of Tier III data centres.

- Enterprise compute platforms like our partner Dell Technologies’ PowerEdge XE9680 H100 SXM enabled servers, RDMA InfiniBand network fabrics and WEKA’s parallel file storage platform.

- A cloud-native software platform, with open-source and enterprise-supported AI development tools like NVIDIA AI Enterprise to provide software developers and cloud engineers with easy-to-use development environments.

- All paired with industry-leading cost-to-use and TCO - an entire tip-to-tail AI cloud - delivering enterprise-ready platforms that are integrated into a foundation of sustainable technology.

This entire sustainable AI stack, end-to-end, is available with the click of a mouse, or a stroke of a keyboard.

Seamlessly enabling users to build Sustainable AI. Today.

As we stand at this crossroads, I see an opportunity not just to adapt, but to lead. It's about setting new standards, pushing the boundaries of what's achievable, and spearheading an industry-wide transformation towards sustainability and efficiency in the face of ever-increasing AI compute demands.

This journey requires collaboration, foresight, and a commitment to innovation that doesn't just respond to immediate needs but anticipates the future landscape of AI compute.

Our achievements in Singapore are just the beginning of a broader vision — a vision where technology and sustainability converge to create a new era of responsible, sustainable AI development.