23 May 2024

Industry Insights

Why liquid cooling is the future we need in data centres today

Data centres today underpin much of the global economy, supporting everything from cloud computing and AI processing to the digital infrastructure that modern business operations and everyday online activities depend on.

To cope with growing demand, operators are deploying increasingly powerful computing hardware. From higher core counts to hyperconverged infrastructure and, more recently, GPUs, these systems have entered data centres to drive faster processing and enable new capabilities.

Unsurprisingly, this surge in high-performance computing systems is putting immense pressure on data centre cooling systems.

At the limits of air cooling

For almost three decades now, traditional data centre cooling has largely relied on moving large volumes of chilled air through the data centre to remove heat generated by servers and other equipment. This method, while effective, is proving inadequate as power densities and heat loads continue to rise.

Some will argue that the data centre industry has been predicting high-density deployments for a long time now and that actual demand has always trailed behind the projections. The scepticism has merit; despite years of predictions of high-density workloads, Uptime Institute’s latest survey from 2023 pegged the average draw at less than 6kW per rack globally.

Yet, there is no denying that the rapid adoption of AI is set to reshape the landscape with its insatiable demand for power-hungry computing. The NVIDIA DGX H100 server, for instance, requires up to 10.2kW of power to drive the eight H100 GPUs within each 8U chassis. And while five such servers can theoretically be installed in each 42U rack, real-world data centre deployments tend to entail as few as two servers per rack as data centre cooling struggles to keep up.
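To make the rack arithmetic above concrete, here is a minimal sketch in Python. It uses only the figures quoted in this article (10.2kW per 8U server, a 42U rack); the function and constant names are illustrative, and it assumes no rack space is reserved for switches or power distribution.

```python
# Figures quoted in the article; names are illustrative.
DGX_H100_MAX_KW = 10.2   # maximum power per DGX H100 server
DGX_H100_HEIGHT_U = 8    # chassis height in rack units
RACK_HEIGHT_U = 42       # rack height used in the article


def servers_that_fit(rack_u: int = RACK_HEIGHT_U,
                     server_u: int = DGX_H100_HEIGHT_U) -> int:
    """Servers that fit by height alone (assumes no space for switches or PDUs)."""
    return rack_u // server_u


def rack_power_kw(servers: int, server_kw: float = DGX_H100_MAX_KW) -> float:
    """Worst-case rack draw if every server runs at its maximum power."""
    return servers * server_kw


if __name__ == "__main__":
    full = servers_that_fit()
    print(f"Theoretical: {full} servers, {rack_power_kw(full):.1f} kW per rack")
    print(f"Typical:     2 servers, {rack_power_kw(2):.1f} kW per rack")
```

Under these assumptions a fully populated rack approaches 51kW, more than eight times the sub-6kW global average, while even the two-server case is roughly 20kW.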

The situation will only worsen as the next generation of GPUs, such as NVIDIA’s Blackwell platform, is released before the end of this year. These GPUs will draw substantially more power: up to 1,000 watts per B200 GPU, compared with the H100’s 700 watts.
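The size of that jump can be worked out in a few lines of Python. This is a rough sketch based on the two per-GPU figures above; the eight-GPU chassis used for the second calculation is an assumption carried over from the DGX H100 layout, not a published B200 system specification.

```python
H100_WATTS = 700    # per-GPU figure quoted in the article
B200_WATTS = 1000   # per-GPU upper bound quoted in the article
GPUS_PER_CHASSIS = 8  # assumption: same GPU count as the DGX H100


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


def extra_gpu_kw(gpus: int = GPUS_PER_CHASSIS) -> float:
    """Additional GPU-only draw per chassis, in kW."""
    return gpus * (B200_WATTS - H100_WATTS) / 1000


if __name__ == "__main__":
    print(f"Per-GPU increase: {percent_increase(H100_WATTS, B200_WATTS):.1f}%")
    print(f"Extra GPU draw per chassis: {extra_gpu_kw():.1f} kW")
```

That is an increase of about 43% per GPU, or an extra 2.4kW of GPU heat per hypothetical eight-GPU chassis before counting CPUs, memory, and networking.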

Liquid cooling in the data centre

As data centres get hotter, liquid cooling systems, with their superior heat capacity and thermal conductivity, provide a more efficient solution than traditional air cooling. Indeed, experts consider liquid cooling highly beneficial at rack power densities of 40kW to 60kW, and mandatory at 80kW and beyond.
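As a rough illustration, those density thresholds can be written as a simple lookup. The sketch below is not an industry standard: the function name and the "optional" label are hypothetical, and because the guidance above does not cover the 60kW to 80kW band, the code assumes it falls under "highly beneficial".

```python
BENEFICIAL_FROM_KW = 40   # lower bound of the "highly beneficial" range
MANDATORY_FROM_KW = 80    # liquid cooling described as mandatory from here


def liquid_cooling_guidance(rack_kw: float) -> str:
    """Map a rack's power density to the rule-of-thumb guidance above."""
    if rack_kw < 0:
        raise ValueError("rack power cannot be negative")
    if rack_kw >= MANDATORY_FROM_KW:
        return "mandatory"
    if rack_kw >= BENEFICIAL_FROM_KW:
        # Assumption: the unstated 60-80 kW band is treated as beneficial.
        return "highly beneficial"
    return "optional"  # hypothetical label for racks below 40 kW


if __name__ == "__main__":
    for kw in (6, 20.4, 51, 80):
        print(f"{kw:>5} kW per rack -> {liquid_cooling_guidance(kw)}")
```

Applied to the earlier figures, a two-server DGX H100 rack (about 20kW) still sits below the range, while a fully populated one (about 51kW) is well inside it.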

The other inherent benefit to liquid cooling is the removal, or at least a reduction, of fans. This alone could bring down overall power consumption by as much as 25%. While some of this is offset by the use of pumps, the savings are indisputable.

There are several liquid cooling approaches in use today:

- The simplest way to deploy liquid cooling is probably a passive or active rear door heat exchanger, with chilled water piped right to the back of each server rack.

- In direct-to-chip (DTC) cooling, the liquid coolant is piped directly to cold plates attached to CPUs or GPUs, drawing away the heat and keeping these components cool.

- In immersion cooling, the entire server is submerged in a dielectric, thermally conductive fluid to dissipate heat.

By effectively removing heat from servers, liquid cooling systems enable data centres to support more powerful systems while also making it possible to pack more of them into a smaller footprint.

Increasing energy efficiency

But liquid cooling isn’t just about the ability to support the deployment of next-gen systems. Around the world, nations, corporations, and individuals are doing all they can to secure a healthy and equitable planet for present and future generations. A key strategy is the reduction of carbon emissions and energy consumption.

Within the data centre, liquid cooling offers a unique opportunity to significantly reduce energy consumption and the environmental impact of the same workload by simply switching from air cooling to liquid cooling. This shift not only reduces operational costs in a time of soaring energy prices but also paves the way to a more sustainable future for all.

The need has never been more pressing: data from the Uptime Institute shows that Power Usage Effectiveness, or PUE, has flattened out and even rebounded slightly. As more data centres are built and power consumption rises to accommodate the growing demand for AI, improving energy efficiency matters more than ever. After all, data centre deployments are rarely upgraded once operational, so optimising their efficiency today is paramount for long-term sustainability.

Towards a green AI revolution

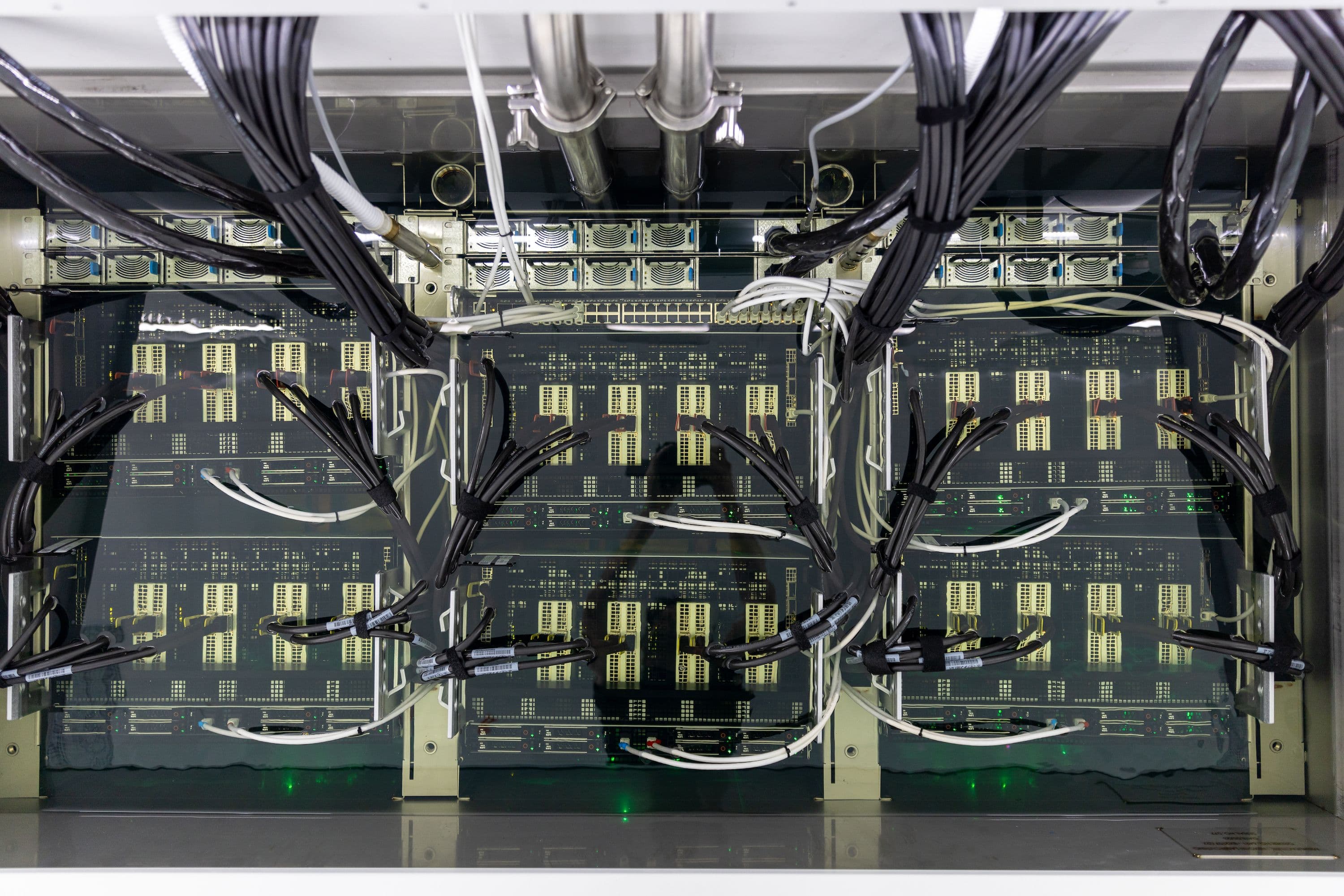

At SMC, we have gone all-in with single-phase immersion cooling using a non-fluorocarbon-based, non-volatile dielectric fluid that has been usable for over 15 years. We have also adopted an end-to-end strategy to design the SMC HyperCube, a unique immersion cooling system with multiple interconnected tanks.

Unlike the standalone tanks and multiple pumps commonly used in traditional immersion cooling solutions, our multi-tank system uses a single pump (2N configuration) to recirculate the fluid through each cluster for maximum efficiency.

By redesigning every element of this infrastructure, we were able to increase cluster density fourfold and lower energy use by half. This means our availability zones use as little as half the energy of legacy GPU clouds, with a standalone PUE of less than 1.03. This is a generation ahead of everything else commercially available today.
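To put the PUE figure in context: PUE is the ratio of total facility power to the power delivered to IT equipment, so a value of 1.03 means about 3% overhead on top of the IT load. The short Python sketch below shows the calculation; the 1,000kW IT load is a hypothetical example, not a figure from our deployments.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power distribution, etc.) implied by a PUE."""
    return it_kw * pue_value - it_kw


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical IT load, for illustration only
    print(f"PUE for 1030 kW total: {pue(1030.0, it_load_kw):.2f}")
    print(f"Overhead at PUE 1.03: {overhead_kw(it_load_kw, 1.03):.0f} kW")
```

For the hypothetical 1,000kW IT load, a PUE of 1.03 corresponds to roughly 30kW spent on everything other than computing.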

HyperCube deployments are currently live in two data centres in Singapore: as a greenfield deployment across an entire level (STT Singapore 6) and as an edge deployment retrofitted into an underground car park of an existing data centre (STT MediaHub).

Our technology lets us provide sustainable AI infrastructure that dramatically decreases energy use and carbon emissions to power a green AI revolution. Our efforts to date have allowed us to bring genuine, breakthrough sustainable infrastructure to enable AI workloads at scale.

We are not done yet. Research and development continues to support even higher-powered GPUs, such as NVIDIA’s upcoming B200, and even faster networking technologies in an immersion environment. If there is one thing we are certain of, it is that liquid cooling is the future of data centre cooling.

A future that is now available.